Navigating the AI Anxiety Era: Disruption, Human Capital, and the Data Dilemma

By Ang

April 20, 2025

This article explores the growing phenomenon of AI anxiety: widespread concern about artificial intelligence’s impact on jobs, ethics, and human relevance. It argues that, unlike past technological revolutions, AI poses a unique disruption to cognitive labor, accelerating uncertainty in both high- and low-skilled industries.

The Rise of AI Anxiety

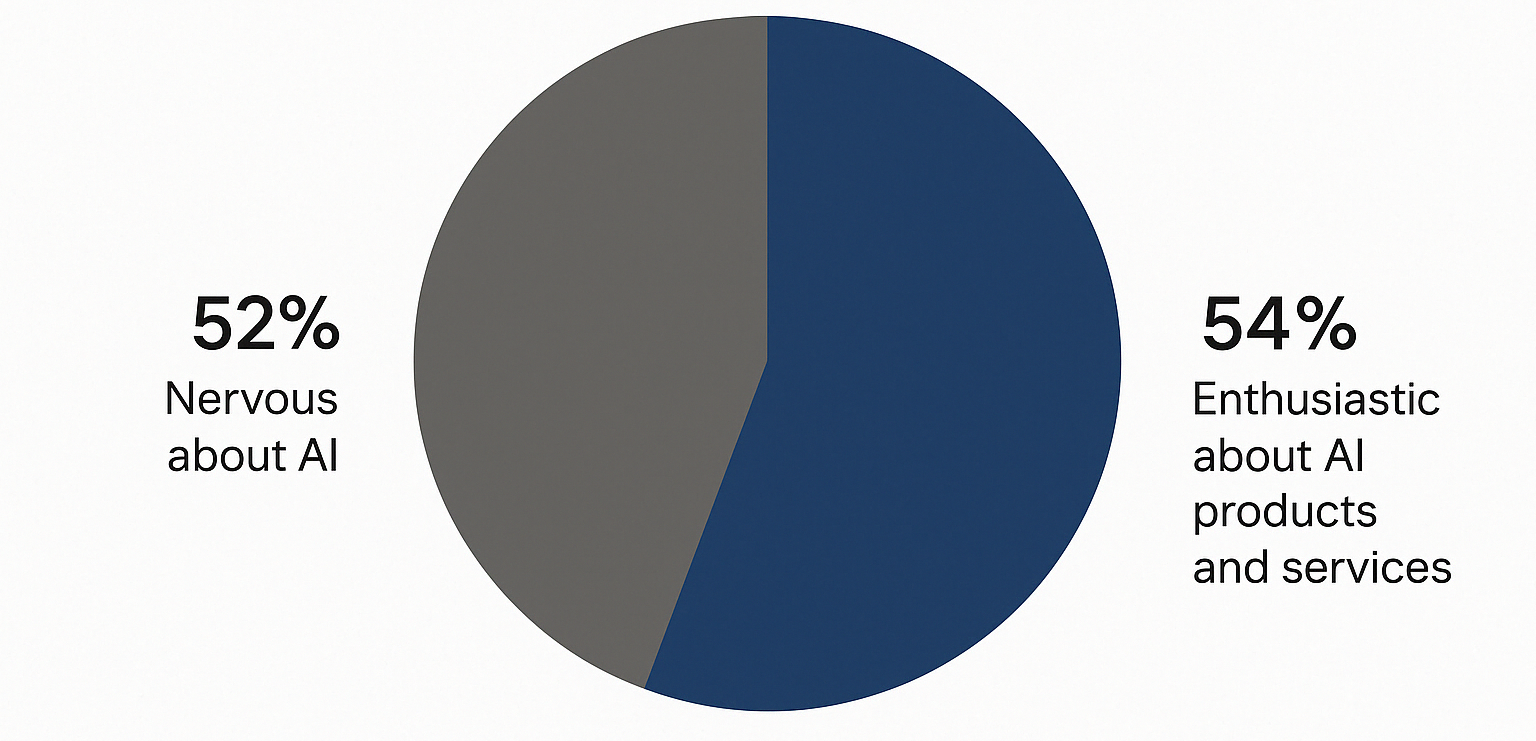

Global surveys show that anxiety about artificial intelligence has climbed to new highs: 52% of adults say products and services using AI make them nervous, a figure that has risen more than any other AI-related measure in recent years (Ipsos, 2023). This apprehension sits in near-perfect tension with excitement about AI's potential (54%), creating a sociopsychological phenomenon that warrants serious academic and policy attention. Skepticism toward AI is markedly higher in high-income countries than in emerging markets, where enthusiasm is more common, which may suggest that closer proximity to technological integration intensifies rather than alleviates concern (Ipsos, 2023).

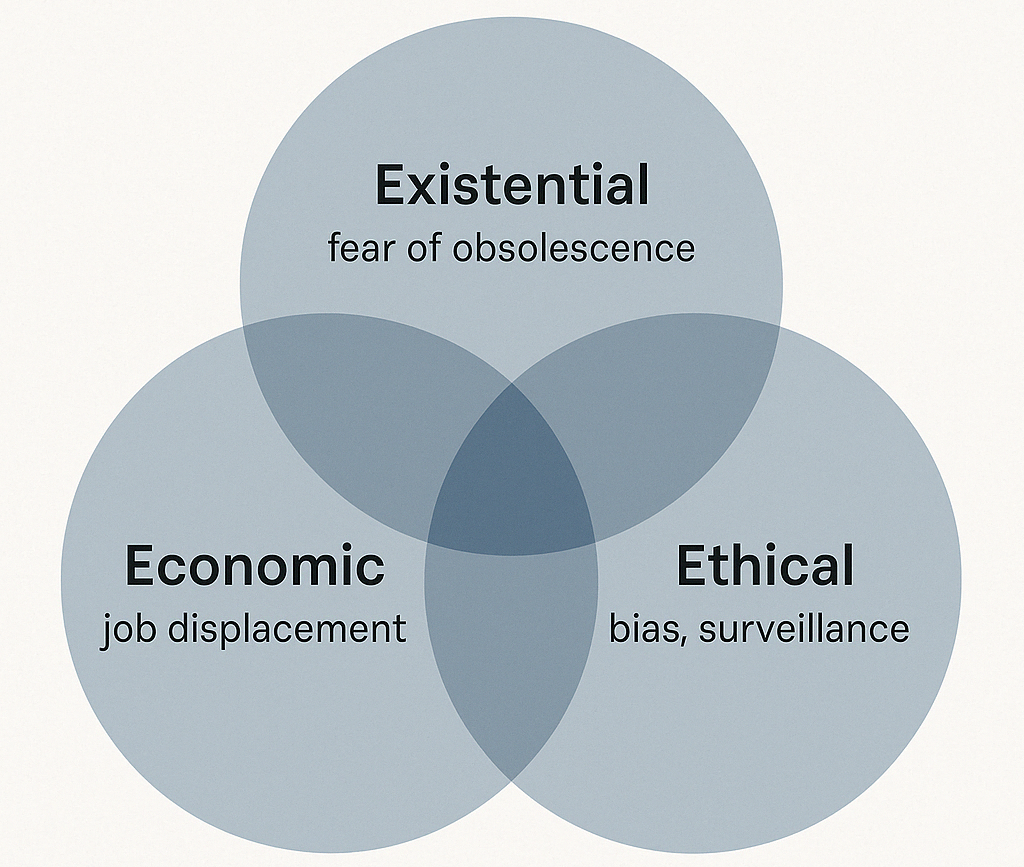

The psychological dimensions of this anxiety extend beyond superficial technophobia; they represent a rational response to unprecedented technological change. According to research by the Oxford Internet Institute (2024), these concerns manifest along three primary dimensions: existential (fear of human obsolescence), economic (anxiety about job displacement), and ethical (concern about algorithmic bias and surveillance). This multifaceted anxiety has grown more pronounced as AI systems demonstrate capabilities once considered uniquely human, such as creative expression, emotion recognition, and complex decision-making (Brynjolfsson and McAfee, 2023).

The urgency of addressing these concerns has escalated as AI systems rapidly integrate into critical infrastructure, employment structures, and daily decision-making. EY's global survey (2023) found that 76% of employees believe AI will fundamentally transform their industries within five years, with 41% expressing high levels of anxiety about their preparedness for this transition. As Acemoglu and Restrepo (2022, p.198) argue, "The societal response to this technological transition will determine whether AI becomes a force for broad prosperity or concentrated advantage," highlighting the stakes of effectively navigating this period of accelerated change.

Technological Disruption: A Historical Perspective

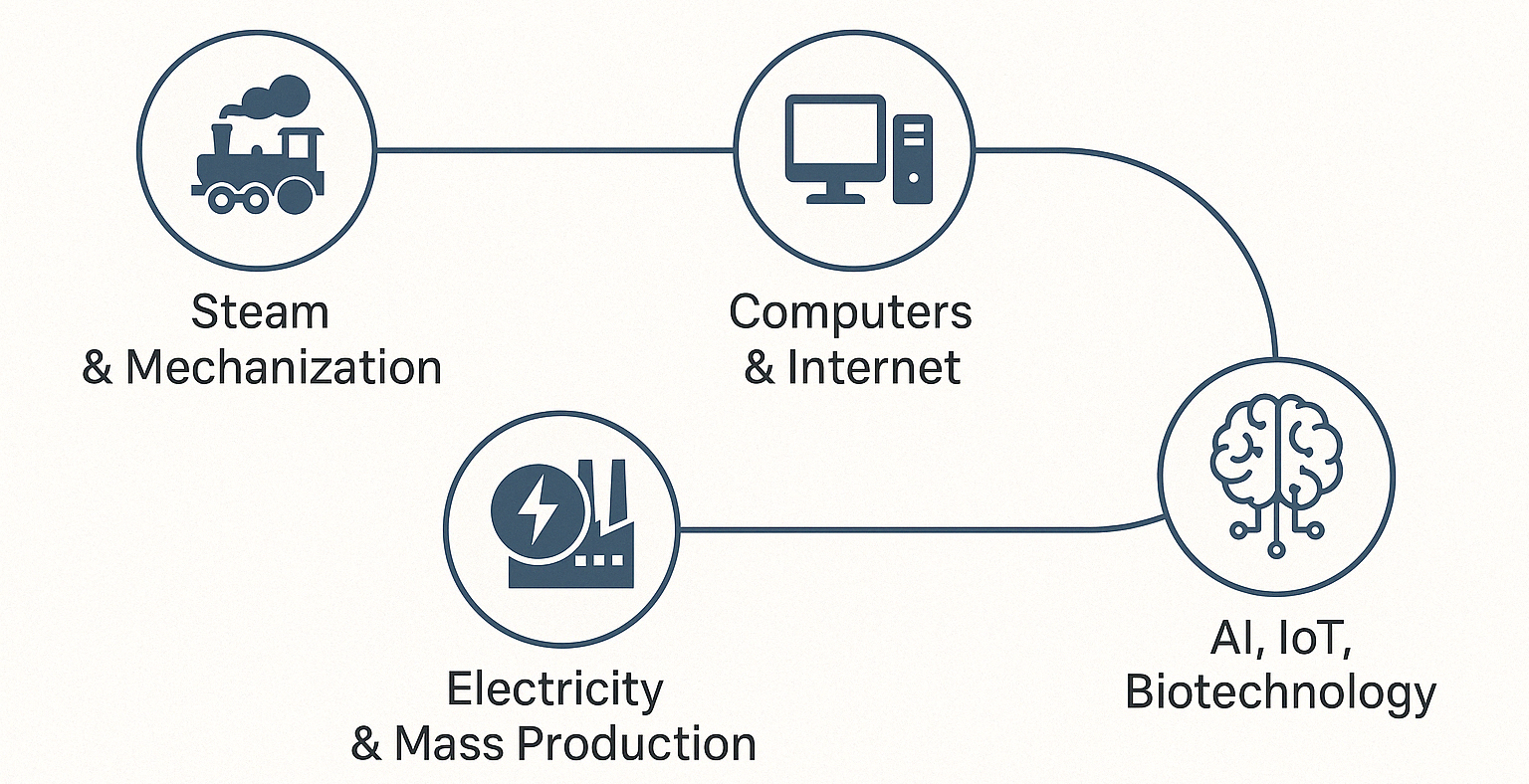

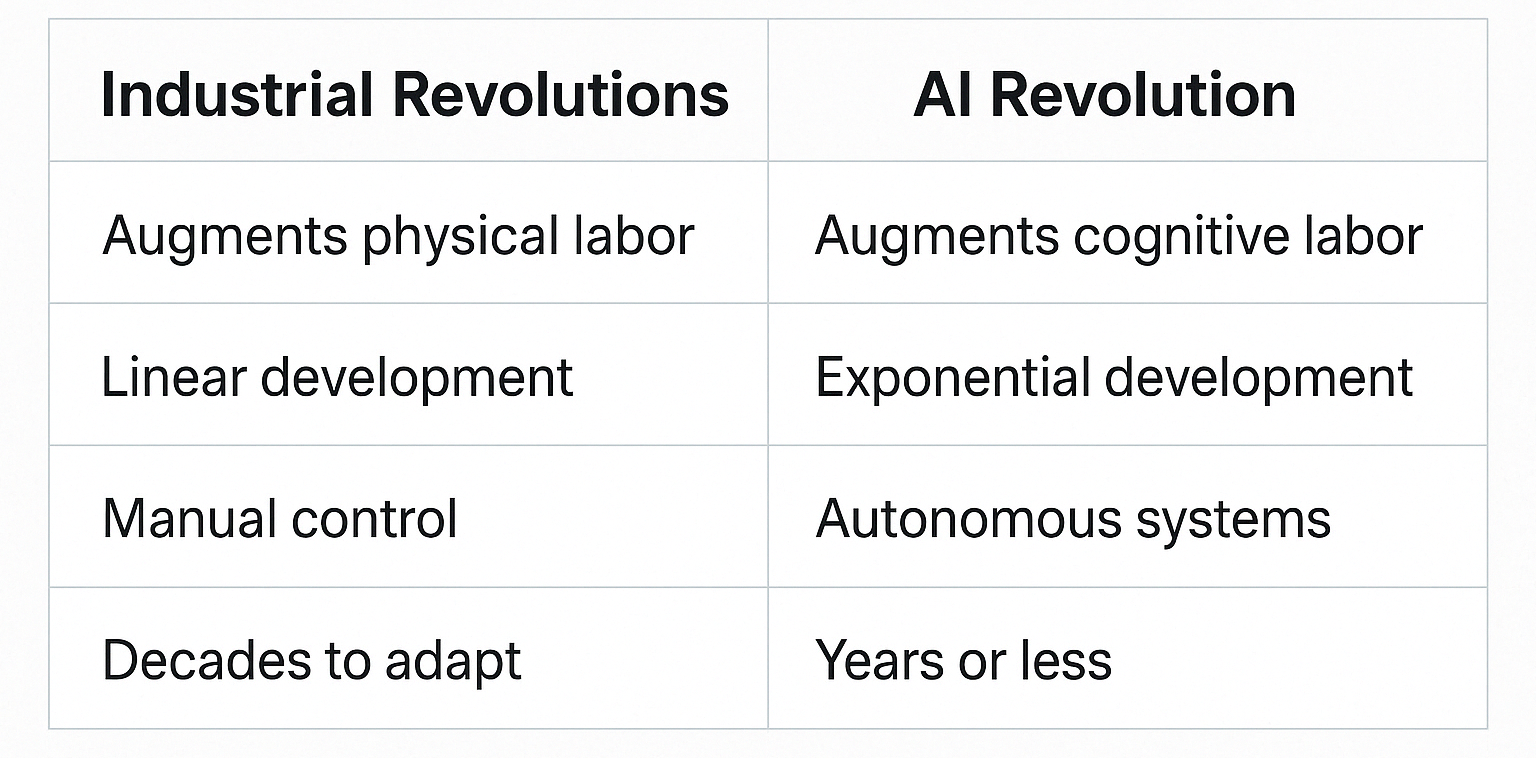

The evolution of technological disruption has followed a trajectory of increasing abstraction and cognitive enhancement. The First Industrial Revolution mechanized production through steam power, the Second introduced electrical power and mass production, and the Third digitized production through electronics and information technology. Today's Fourth Industrial Revolution (Industry 4.0) marks a further shift by integrating AI, IoT, biotechnology, and nanotechnology into all aspects of society and industry (Schwab, 2023). Whereas previous industrial revolutions primarily augmented human physical capabilities, AI has the potential to replicate, and possibly eventually surpass, human cognitive functions.

This transition from physical to cognitive augmentation raises new challenges related to human value, identity, and economic participation. Historical analysis by the McKinsey Global Institute (2023) indicates that previous technological revolutions ultimately created more jobs than they eliminated, but with significant transitional disruption and distributional consequences. The mechanization of agriculture, for instance, helped reduce farming's share of U.S. employment from roughly 40% in 1900 to under 2% today, requiring a massive occupational migration that unfolded over generations rather than years.

Comparative analysis suggests that whereas previous technological revolutions displaced specific categories of manual labor, AI's impact spans vertically across skill hierarchies and horizontally across industry sectors. Netflix's displacement of Blockbuster, in which data-driven recommendation algorithms played a notable role, illustrates how AI-driven models can transform business paradigms and consumer behavior (Sharma, 2024). The financial services sector offers an equally instructive example: AI-powered robo-advisors manage over $1.4 trillion in assets globally, a figure projected to reach $7.7 trillion by 2028 according to Boston Consulting Group (2024).

What distinguishes AI from previous technological disruptions is not merely its scope but its rapid, compounding rate of improvement, a characteristic that accelerates implementation timelines and amplifies both potential benefits and risks. OpenAI's GPT series illustrates this trajectory: GPT-4 exhibited capabilities that would have seemed implausible just five years earlier. This accelerating pace compresses adaptation timelines and taxes institutional and individual capacity for change management. Current concerns about AI-driven disruption are therefore qualitatively different from historical precedents, calling for more robust anticipatory governance frameworks.

The Human Capital Crossroads

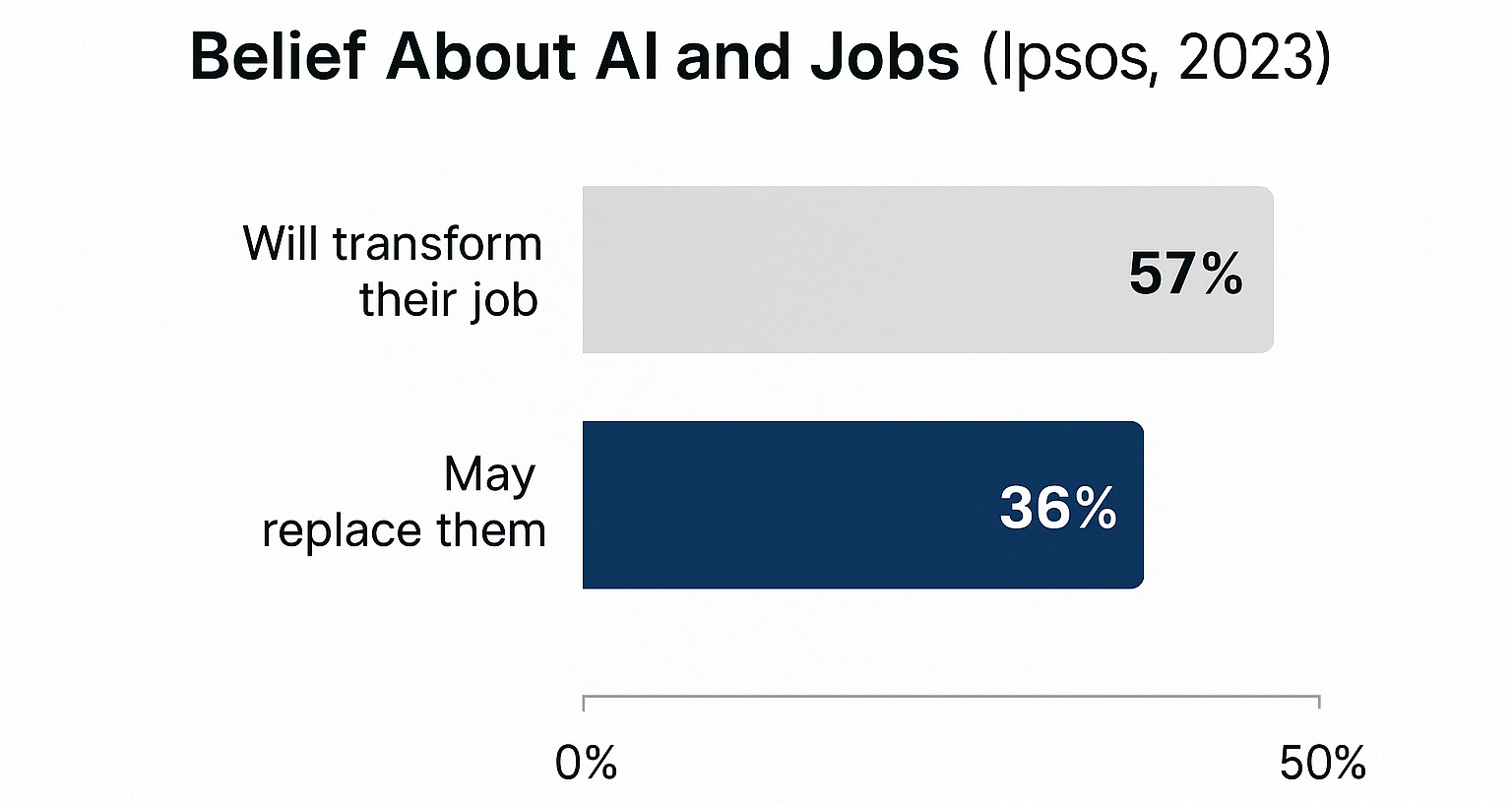

The workforce implications of AI adoption are profound and increasingly quantifiable. Global survey data indicate that 57% of workers expect AI to change how they do their current jobs, while 36% expect it to replace their positions entirely (Ipsos, 2023). The World Economic Forum's Future of Jobs Report 2020 projected that 85 million jobs might be displaced by automation by 2025, while 97 million new roles might emerge at the human-technology interface. This disruption is not uniformly distributed: knowledge workers, middle managers, and those engaged in routine cognitive tasks face disproportionate exposure to automation risk.

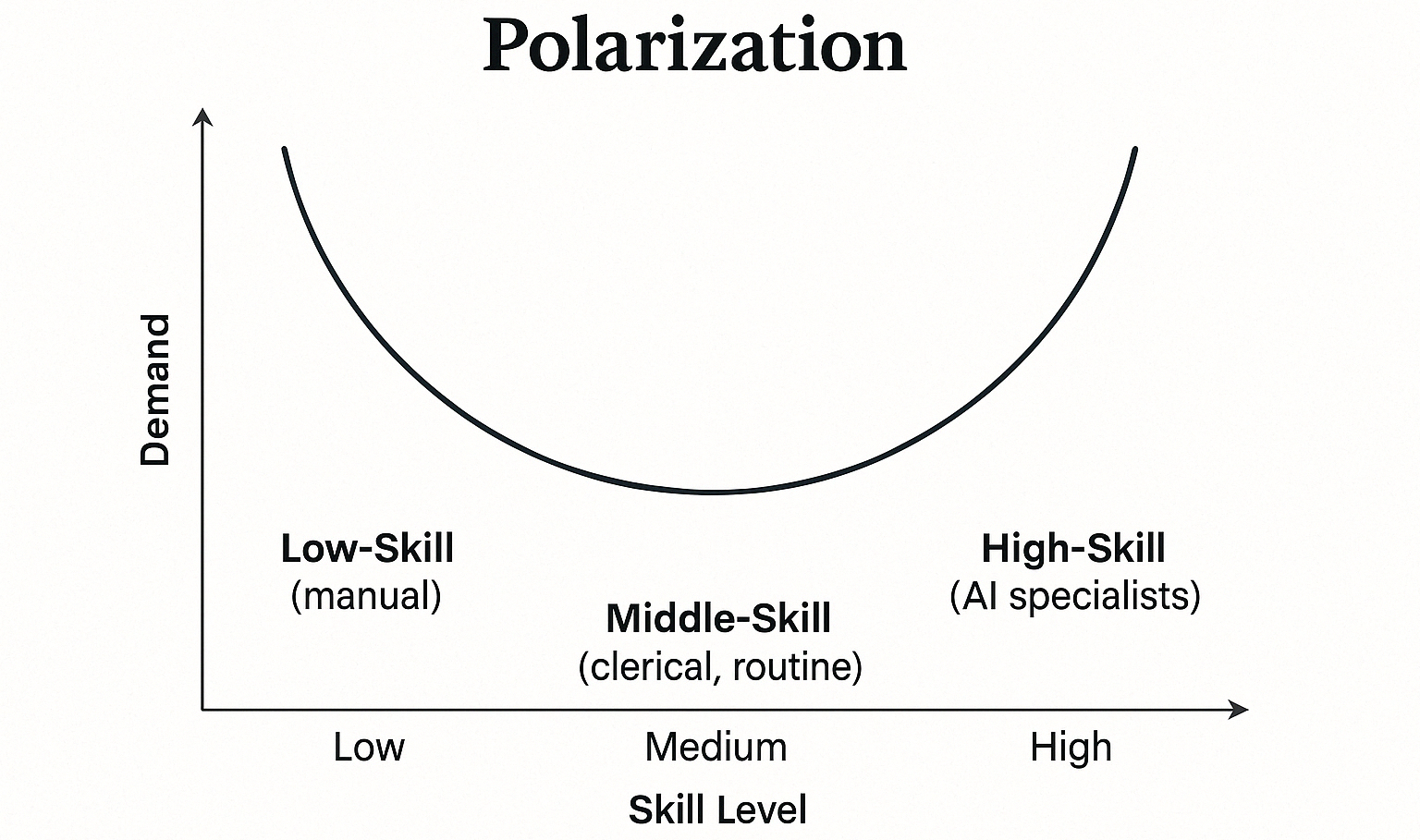

The OECD Skills Outlook (2023) identifies a growing "skills polarization," in which demand rises both for highly specialized technical roles (AI engineers, data scientists) and for distinctly human capabilities (creative problem-solving, ethical judgment, interpersonal communication), while middle-skill occupations erode. This bifurcation produces what labor economists call a "hollowing out" of the labor market, which threatens social mobility pathways and contributes to income inequality. The International Monetary Fund (2023) estimates that approximately 60% of jobs in advanced economies may be significantly affected by AI, with 26% facing high exposure to automation.

Beyond employment statistics, the psychological impact of AI integration extends to patterns of technological dependence. Research indicates that individuals with social anxiety are particularly susceptible to overreliance on conversational AI systems, and 65% of users report that virtual assistants have already altered their behaviors and daily routines (Hu et al., 2023). These findings suggest that AI technologies may both reflect and amplify existing psychological vulnerabilities while reshaping social interaction patterns at scale.

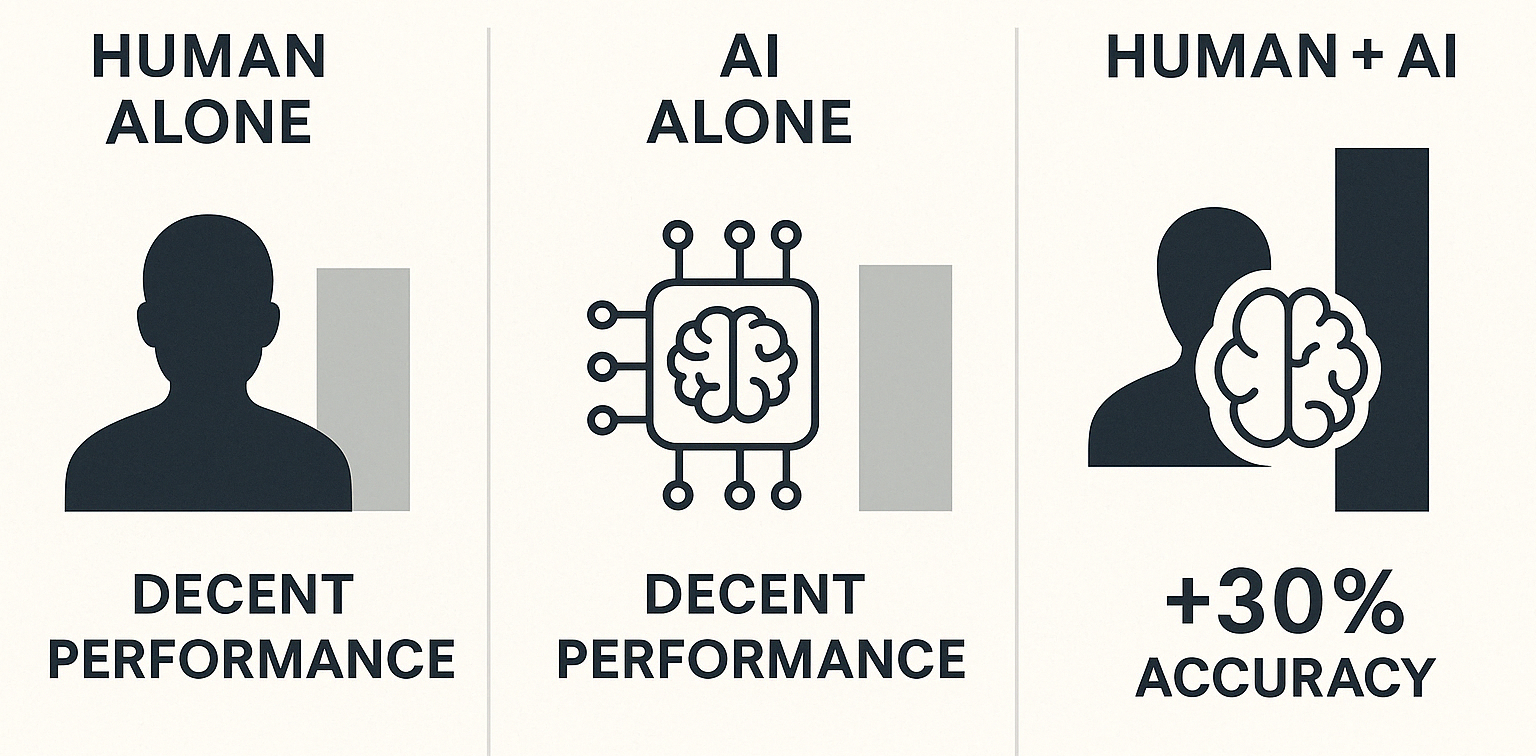

Emerging research from MIT's Task Force on the Work of the Future (2024) identifies "AI complementarity" as a critical factor in successful human capital development strategies. Occupations that effectively combine human judgment with AI capabilities show both higher productivity and greater resistance to full automation. For instance, radiologists using AI diagnostic tools have been reported to achieve 30% higher accuracy than either humans or AI working independently (Lancet Digital Health, 2023). The challenge for organizational leaders therefore extends beyond workforce restructuring to cultivating human-AI relationships that enhance rather than diminish human capabilities.

The Strategic Power of Data

Data has emerged as a critical strategic asset underpinning AI deployment, with profound implications for competitive advantage, privacy protection, and ethical implementation. The European Data Market study (European Commission, 2023) projects the EU data economy to reach €829 billion, or 5.8% of EU GDP, by 2025, with further growth to €1.3 trillion projected by 2028. This valuation reflects data's role as the fundamental resource fueling AI development and deployment.

Organizations must navigate increasingly complex regulatory environments while collecting, processing, and using the massive datasets that AI development requires. The European Union's General Data Protection Regulation (GDPR) has set global precedents for data governance, including the Article 35 requirement to carry out a Data Protection Impact Assessment (DPIA) before any processing that is likely to result in a high risk to individuals' rights and freedoms, a threshold that many AI systems processing personal data meet (Fieldfisher, 2024). These assessments evaluate privacy risks, data minimization, and algorithmic transparency, establishing accountability mechanisms that balance innovation with individual rights.

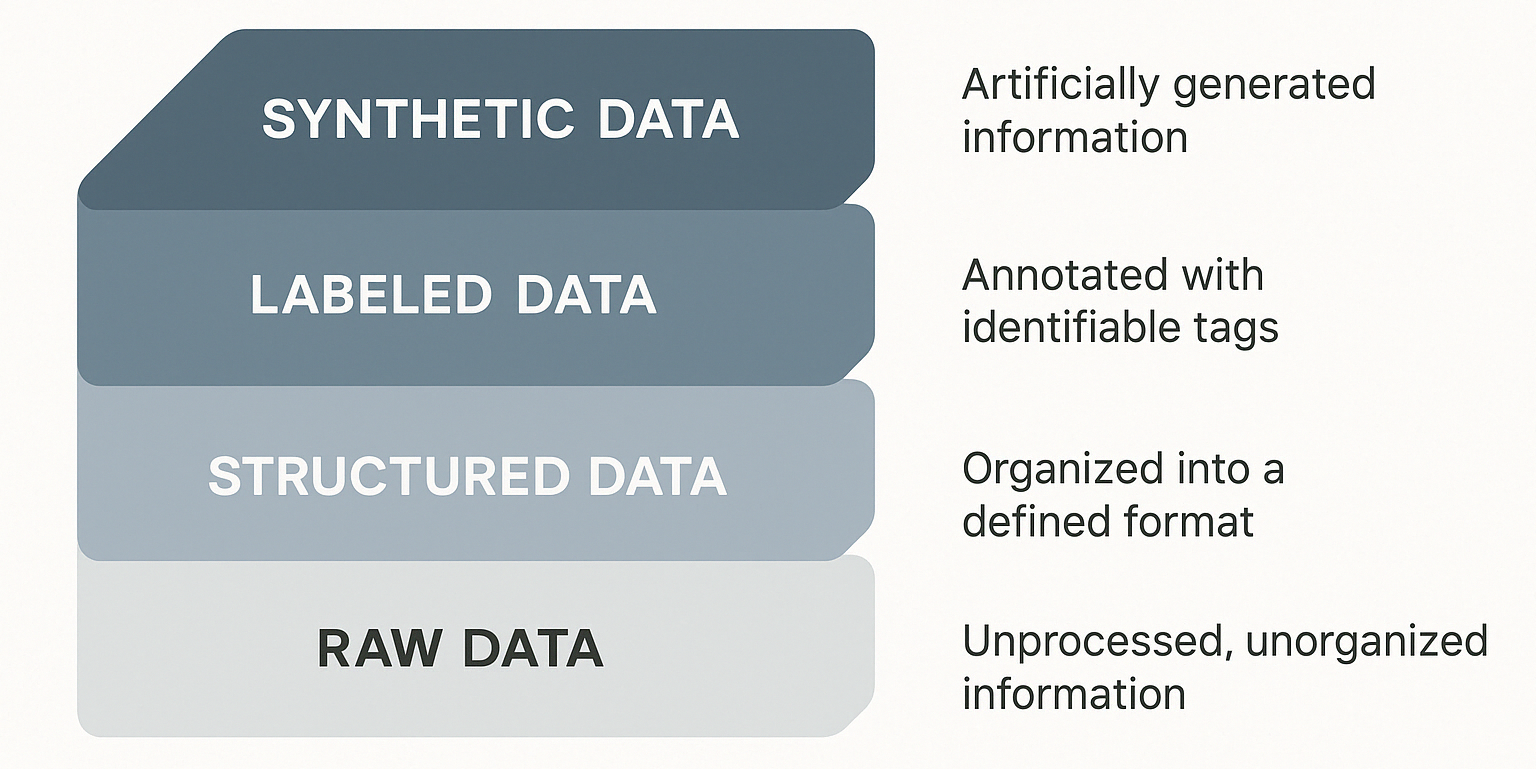

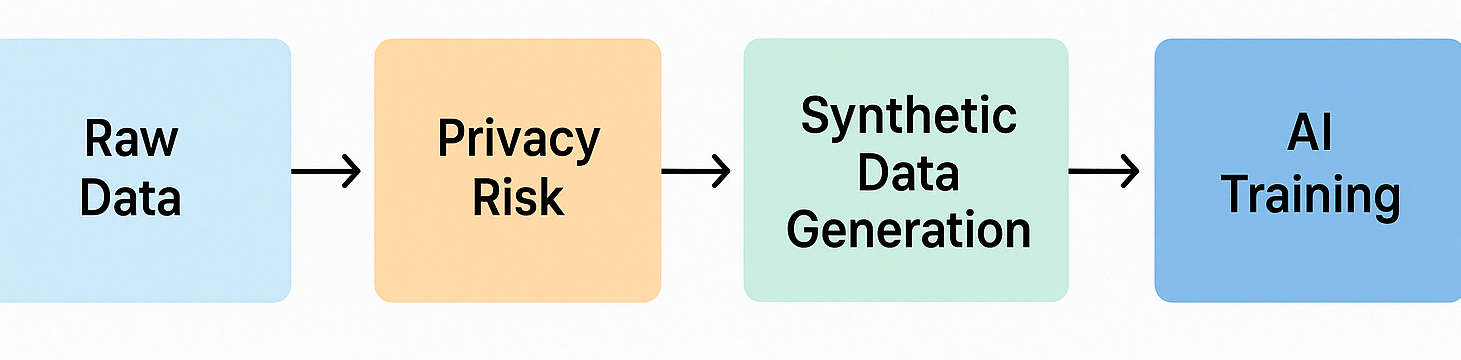

The distinction between data types significantly affects both strategic value and regulatory requirements. Synthetic data generation, which creates artificial datasets that preserve the statistical properties of real data without reproducing actual personal records, has emerged as a critical technology for AI development. According to Fortune Business Insights (2023), the synthetic data generation market is projected to reach $3.2 billion by 2030, a 35% compound annual growth rate. Similarly, data labelling, the process of annotating raw data to create structured training sets, has developed into a specialized industry valued at $1.7 billion globally (Grand View Research, 2023).
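To make the idea of "preserving statistical properties" concrete, here is a deliberately simple sketch of synthetic data generation. It assumes a single numeric column and a normal (Gaussian) model: it estimates the mean and standard deviation of the real values, then samples new values from that distribution. The function name `synthesize_column` and the example transaction amounts are hypothetical; production tools model joint distributions across many columns and add formal privacy protections, which this toy version does not.

```python
import random
import statistics


def synthesize_column(real_values, n, seed=None):
    """Sample n synthetic values from a normal distribution fitted to real_values.

    Only the mean and (sample) standard deviation of the original column are
    retained; no individual record is copied into the output.
    """
    mu = statistics.mean(real_values)
    sigma = statistics.stdev(real_values)
    rng = random.Random(seed)  # local generator so results are reproducible
    return [rng.gauss(mu, sigma) for _ in range(n)]


# Hypothetical "real" transaction amounts (illustrative only).
real_amounts = [120.0, 95.5, 310.2, 87.9, 150.0, 204.3, 99.1, 175.8]
synthetic = synthesize_column(real_amounts, n=10_000, seed=42)

# The synthetic column's mean should sit close to the real column's mean.
print(round(statistics.mean(real_amounts), 1), round(statistics.mean(synthetic), 1))
```

The Gaussian assumption keeps the sketch short but has visible limits: it can produce negative amounts and ignores correlations between columns, which is one reason real generators use richer models.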

The quality, diversity, and ethical procurement of training data fundamentally determine AI system performance and fairness. Research by MIT and Stanford (Zhang et al., 2023) demonstrates that algorithms trained on biased or unrepresentative datasets perpetuate and sometimes amplify existing social inequities. Financial institutions implementing AI-driven fraud detection systems, for instance, must balance the need for comprehensive transaction datasets against privacy considerations and the risk of discriminatory outcomes. Striking this balance requires sophisticated technical infrastructure alongside nuanced ethical frameworks, a combination that remains elusive for many organizations despite its strategic importance.
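One common first check for the kind of discriminatory outcome described above is to compare how often a model flags members of different groups. The sketch below computes per-group flag rates and the gap between the highest and lowest rate (a demographic-parity difference). It assumes binary fraud flags and a single group attribute; the data and the function names `flag_rates` and `flag_rate_gap` are hypothetical, and a real audit would use several fairness metrics, since they can conflict with one another.

```python
from collections import defaultdict


def flag_rates(groups, flags):
    """Return the share of records flagged as suspicious within each group."""
    totals = defaultdict(int)
    flagged = defaultdict(int)
    for group, flag in zip(groups, flags):
        totals[group] += 1
        flagged[group] += int(flag)
    return {g: flagged[g] / totals[g] for g in totals}


def flag_rate_gap(groups, flags):
    """Demographic-parity difference: largest minus smallest group flag rate."""
    rates = flag_rates(groups, flags)
    return max(rates.values()) - min(rates.values())


# Hypothetical model outputs: 1 = transaction flagged as suspicious.
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
flags = [1, 0, 0, 0, 1, 1, 1, 0]

print(flag_rates(groups, flags))     # {'A': 0.25, 'B': 0.75}
print(flag_rate_gap(groups, flags))  # 0.5
```

A gap this large would warrant investigation, but it does not by itself prove discrimination: actual fraud rates may differ between groups, which is why metrics that condition on the true outcome (such as equalized odds) are often checked alongside it.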

National data governance strategies have emerged as critical components of economic competitiveness in AI development. Singapore's Personal Data Protection Act (PDPA) and India's Digital Personal Data Protection Act (DPDPA) exemplify regional approaches that adapt international best practices to local contexts and development priorities. The World Bank (2023) identifies data infrastructure development as a priority investment area for emerging economies seeking to participate meaningfully in the AI-driven economy, highlighting the strategic importance of data sovereignty and governance frameworks.

Bridging the Gap: A Call for AI-Ready Strategies

Successful AI implementation requires multidimensional preparedness strategies that address technological, human capital, and governance dimensions simultaneously. Analysis of high-performing organizations suggests that effective AI adoption correlates strongly with clear strategic objectives, robust change management processes, and explicit ethical frameworks (Jha, 2024). Conversely, implementation failures such as IBM's Watson for Oncology and Microsoft's Tay chatbot illustrate the consequences of inadequate testing, insufficient ethical guardrails, and misalignment between technical capabilities and organizational needs. These contrasting outcomes underscore the importance of comprehensive preparation rather than reactive adaptation.

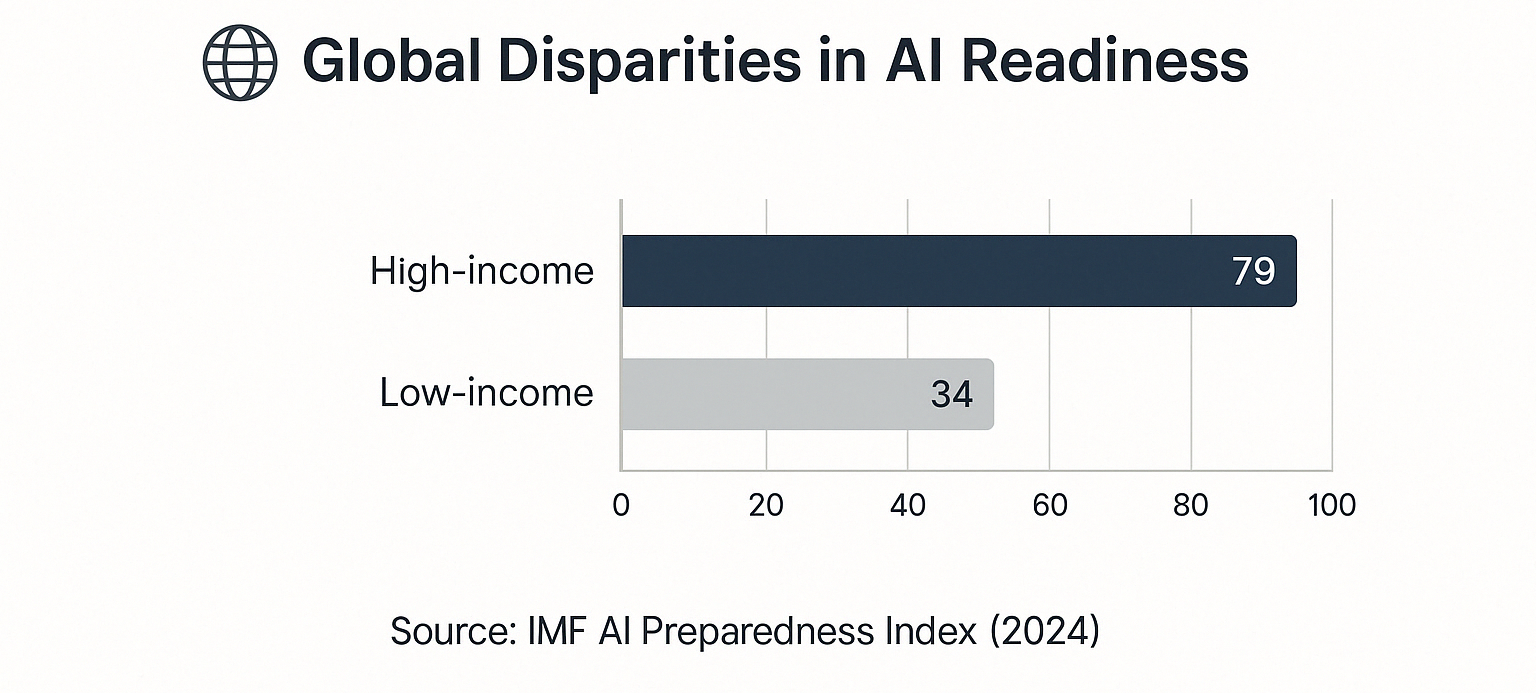

The IMF's AI Preparedness Index (2024), which measures 174 economies across dimensions including digital infrastructure, human capital, innovation ecosystems, and regulatory frameworks, reveals significant disparities in readiness. High-income countries score an average of 79 out of 100, compared with just 34 for low-income nations, suggesting that without intervention AI may exacerbate rather than reduce global inequality. This digital divide requires coordinated international action to ensure that technological benefits are broadly distributed, a challenge recognized in the OECD's AI Policy Observatory recommendations (2023).

Organizations should conduct structured AI-readiness assessments that evaluate technical infrastructure, data quality, workforce capabilities, and governance mechanisms before making significant AI investments. The Harvard Business Review's AI Readiness Framework (2023) identifies seven critical dimensions for organizational assessment: strategic alignment, data infrastructure, technical capabilities, workforce skills, ethical guidelines, implementation processes, and performance metrics. Within these dimensions, assessments should pinpoint specific capability gaps, for example in data privacy compliance, algorithmic transparency, and human-AI collaboration potential.
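A readiness assessment of this kind can start as simply as scoring each dimension and listing those that fall below a target. The sketch below uses the seven dimension names listed above, but the 1-to-5 scale, the default threshold of 3, the function name `readiness_gaps`, and the example scores are all hypothetical choices for illustration, not part of the HBR framework.

```python
# The seven dimensions named in the HBR AI Readiness Framework (2023).
DIMENSIONS = (
    "strategic alignment",
    "data infrastructure",
    "technical capabilities",
    "workforce skills",
    "ethical guidelines",
    "implementation processes",
    "performance metrics",
)


def readiness_gaps(scores, threshold=3):
    """Return dimensions scored below threshold, weakest first.

    Scores are assumed to be on a hypothetical 1-to-5 scale. Raises ValueError
    if any dimension is unscored, so an incomplete assessment cannot hide gaps.
    """
    missing = [d for d in DIMENSIONS if d not in scores]
    if missing:
        raise ValueError(f"unscored dimensions: {missing}")
    # Sort by score, then by name, so ties are listed in a stable order.
    gaps = sorted((scores[d], d) for d in DIMENSIONS if scores[d] < threshold)
    return [d for _, d in gaps]


# Hypothetical self-assessment scores for one organization.
scores = {
    "strategic alignment": 4,
    "data infrastructure": 2,
    "technical capabilities": 3,
    "workforce skills": 2,
    "ethical guidelines": 1,
    "implementation processes": 3,
    "performance metrics": 4,
}

print(readiness_gaps(scores))
# ['ethical guidelines', 'data infrastructure', 'workforce skills']
```

Ordering gaps from weakest to strongest gives a rough priority list; in practice, organizations would weight dimensions differently depending on the AI use case under consideration.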

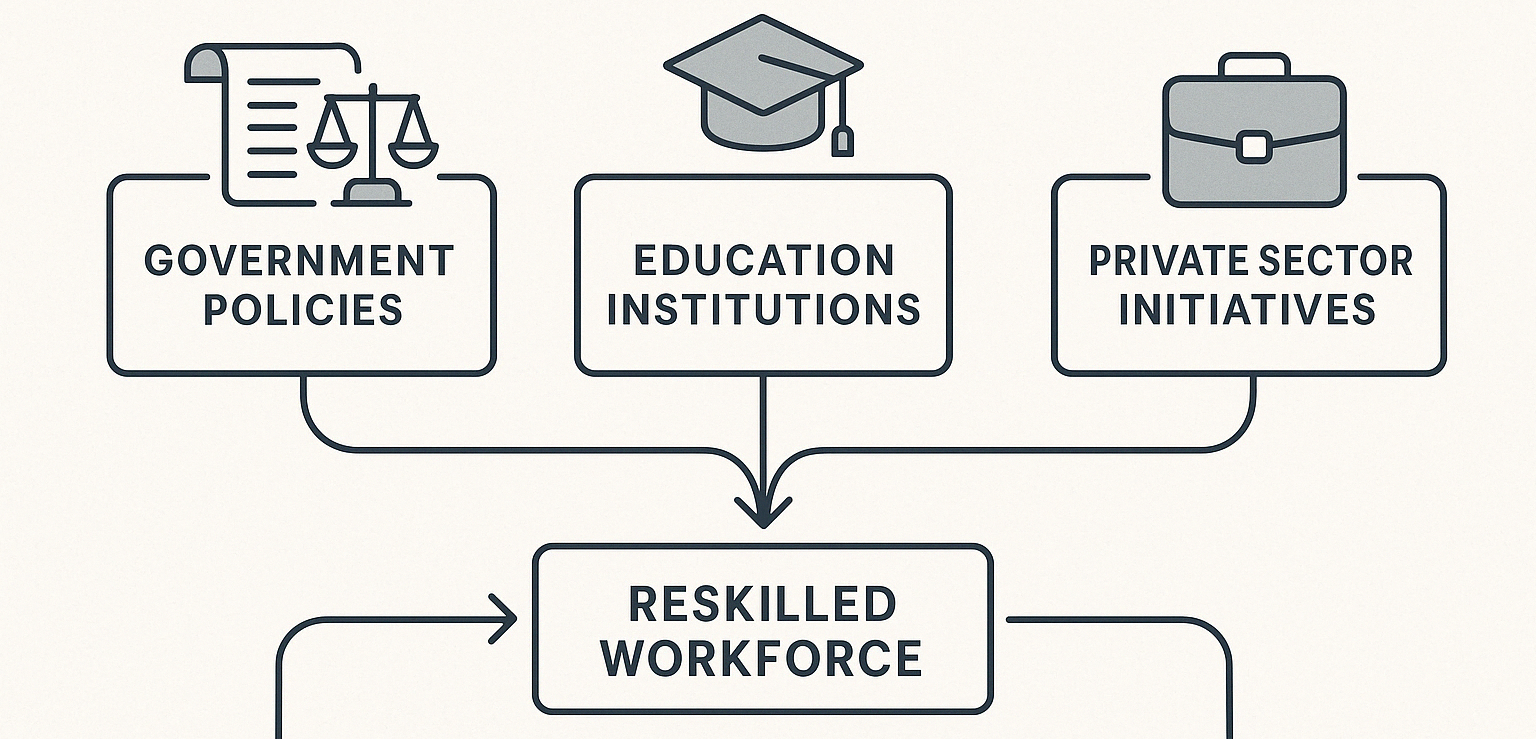

Simultaneously, public policymakers must develop inclusive digital skills strategies that democratize access to AI literacy while establishing regulatory frameworks that encourage innovation within ethical boundaries. The European Union's Digital Skills and Jobs Coalition represents an exemplary approach, combining public funding for education programs with private sector commitments to train and hire digital talent. Educational institutions must evolve curricula to emphasize the uniquely human capabilities least susceptible to automation (complex problem-solving, ethical reasoning, and creative collaboration) while cultivating technical fluency appropriate to diverse career pathways.

For individuals, developing an "AI-complementary" skill portfolio is arguably the most effective adaptation strategy. Research from Google's People + AI Research initiative (2023) identifies three capability categories with enduring value: creative intelligence (problem framing and novel solution development), social intelligence (team leadership, negotiation, and emotional understanding), and physical dexterity coupled with spatial awareness. Financial services professionals, for instance, increasingly complement technical expertise with sophisticated client relationship management and ethical decision-making, skills that remain challenging for AI systems to replicate.

Conclusion: From Fear to Foresight

The anxiety surrounding artificial intelligence reflects both legitimate concern and transformative potential. By acknowledging rather than dismissing these concerns, stakeholders can develop more thoughtful, inclusive approaches to AI integration that maximize benefits while minimizing disruption. The history of technological change shows that periods of anxiety often precede periods of unprecedented productivity and innovation, but only when accompanied by appropriate social adaptations and governance frameworks. The current moment demands similar adaptive capacity, directed not toward preventing technological advancement but toward shaping its implementation to enhance human capability and dignity.

For organizations, the strategic imperative is clear: transform AI anxiety from an implementation barrier into a catalyst for thoughtful design and deployment. The MIT Sloan Management Review (2023) finds that companies explicitly addressing employee concerns about AI through transparent communication and collaborative implementation achieve 42% higher adoption rates and 27% greater productivity gains than those pursuing purely technical solutions. Similarly, McKinsey's global AI survey (2024) indicates that organizations combining technological investment with comprehensive reskilling initiatives realize three times the return on investment compared to those focusing exclusively on technology deployment.

For individuals, the path forward involves developing complementary capabilities that enhance rather than compete with artificial intelligence. The World Economic Forum (2024) projects that professionals who effectively integrate AI tools into their workflows will experience productivity gains of 30-40%, creating substantial advantages over those who either resist technology adoption or fail to maintain distinctly human capabilities. This complementary relationship requires both technical fluency and metacognitive awareness: understanding not only how to use AI systems but also when their application is appropriate and beneficial.

For policymakers, success requires balancing innovation incentives with robust protections for human autonomy and dignity. The European Union's AI Act provides a regulatory template that classifies AI applications by risk level and imposes proportionate obligations, while Singapore's Model AI Governance Framework offers principles-based guidance that maintains regulatory flexibility. These complementary approaches demonstrate that effective governance need not impede innovation; it can instead channel technological development toward socially beneficial outcomes.

By reframing anxiety as constructive caution and channeling it toward proactive preparation, stakeholders across the ecosystem can harness the transformative potential of artificial intelligence while preserving and enhancing the unique value of human contribution. In the words of MIT economist Daron Acemoglu (2023, p.17), "The path of technological development is not predetermined; it reflects our collective choices about which problems to solve and which values to prioritize."

The choice between disruption and downfall is not predetermined; it remains ours to make through thoughtful, inclusive, and forward-looking governance of this powerful technology.

References

- Acemoglu, D. and Restrepo, P. (2022) 'Artificial Intelligence, Automation and Work', in Agrawal, A., Gans, J. and Goldfarb, A. (eds.) The Economics of Artificial Intelligence: An Agenda. Chicago: University of Chicago Press, pp. 197-236.

- Boston Consulting Group (2024) Global Asset Management 2024: Navigating the AI Revolution, Boston Consulting Group, Boston.

- Brynjolfsson, E. and McAfee, A. (2023) The AI Dilemma: Augmentation, Automation, and the Future of Work. MIT Press, Cambridge.

- European Commission (2023) The European Data Market Study 2021-2023, Publications Office of the European Union, Luxembourg.

- Fieldfisher (2024) 'AI & privacy compliance: getting data protection impact assessments right', Fieldfisher Insights, January.

- Fortune Business Insights (2023) Synthetic Data Generation Market Size, Share & COVID-19 Impact Analysis, FBI Market Research, Dublin.

- Grand View Research (2023) Data Labeling Market Size, Share & Trends Analysis Report, Grand View Research, San Francisco.

- Harvard Business Review (2023) 'The AI Readiness Framework: Seven Dimensions of Organizational Preparation', Harvard Business Review, 101(3), pp. 86-94.

- Hu, B. et al. (2023) 'People with social anxiety more likely to become overdependent on conversational artificial intelligence agents', Computers in Human Behavior, 138, 107443.

- International Monetary Fund (2023) World Economic Outlook: AI and the Future of Work, IMF, Washington D.C.

- International Monetary Fund (2024) AI Preparedness Index: Measuring Readiness Across 174 Economies, IMF, Washington D.C.

- Ipsos (2023) 'AI is making the world more nervous', Ipsos Global Advisor Survey, 5 September.

- Jha, D.K. (2024) 'AI: Your Ticket to Disruption OR Your Downfall - The Choice is Yours', LinkedIn Pulse, 4 September.

- Lancet Digital Health (2023) 'Human-AI Collaboration in Medical Imaging: A Systematic Review', The Lancet Digital Health, 5(2), pp. e112-e123.

- McKinsey Global Institute (2023) Jobs Lost, Jobs Gained: Workforce Transitions in a Time of Automation, McKinsey & Company, New York.

- McKinsey Global Institute (2024) The State of AI in 2024: Balancing Promise and Practice, McKinsey & Company, New York.

- MIT Sloan Management Review (2023) 'Beyond Implementation: The Human Factors in AI Adoption', MIT Sloan Management Review, 64(4), pp. 1-12.

- MIT Task Force on the Work of the Future (2024) The Work of the Future in an AI World: Building Better Jobs in an Age of Intelligent Machines, MIT Press, Cambridge.

- OECD (2023) OECD Skills Outlook 2023: The Impact of AI on the Future of Work, OECD Publishing, Paris.

- OECD (2023) Recommendation of the Council on Artificial Intelligence, OECD AI Policy Observatory, Paris.

- Oxford Internet Institute (2024) The Psychology of AI Anxiety: Understanding Public Perceptions and Responses, University of Oxford, Oxford.

- People + AI Research (2023) Human-Centered AI: Guidelines for Human-AI Interaction, Google Research, Mountain View.

- Schwab, K. (2023) The Fourth Industrial Revolution, Currency Press, New York.

- Sharma, R. (2024) 'The Industrial Revolution and the Future of Artificial Intelligence', LinkedIn Pulse, 19 June.

- World Bank (2023) Digital Economy Report: Building Foundations for the AI Economy, World Bank Group, Washington D.C.

- World Economic Forum (2024) The Future of Jobs Report 2024, World Economic Forum, Geneva.

- Zhang, H. et al. (2023) 'Algorithmic Bias and Fairness in Machine Learning: A Systematic Review', Journal of Artificial Intelligence Research, 76, pp. 1213-1279.